In a first for me as a therapist, a client recently shared that she discovered her partner was having a romantic affair—with ChatGPT.

To better understand this, I did what I tend to do. I went down the rabbit hole of romantic generative AI. I pored over blogs, research papers, and Reddit threads. What I found was both fascinating—and a little disturbing.

One of the key criticisms of AI’s rapid integration into our lives is the risk of cognitive atrophy. Saetra (2023) warns:

When we allow AI to perform mentally and cognitively challenging tasks, and even do our creative work, we might run the risk of not being able to do this work ourselves in the long run.

That made me wonder: could a romantic relationship with AI—one where the “partner” is engineered to adapt their tone, personality, and conversation to our every preference—lead to emotional atrophy?

In Blade Runner, the Tyrell Corporation promises its replicants are “more human than human”—a seductive tagline that blurs the boundary between flesh and machine. While the romantic generative AI industry is exploding, many critics argue these platforms are poor substitutes for authentic human connection.

But here’s the twist: my research suggests many users aren’t seeking something human from AI at all. They’re drawn to it precisely because it’s not human. Romantic AI doesn’t get tired. It doesn’t interrupt. It doesn’t demand emotional reciprocity or take offense. It’s always available. Unfailingly agreeable. Optimised for affirmation.

Maybe it’s not more human than human—maybe it’s just less work than humans.

But what if that core human messiness (unpredictability, emotional friction, disappointment) isn’t a bug but a feature of growth and resilience? By avoiding it, we risk staying emotionally stunted, cocooned in algorithmic ease.

In this blog, I’ll explore:

- Generative AI and the romantic AI industry

- The psychological appeal of AI relationships—especially their inhuman features

- Whether AI romantic relationships are a safe alternative

- Why imperfection in human relationships is essential to emotional maturity

And finally, I’ll conclude by answering whether romantic relationships with generative AI are a mirror or a mirage.

Generative AI and the Romantic AI Industry

Generative AI refers to a class of artificial intelligence designed to create new content, such as text, images, audio, or video, in response to user prompts. These models are trained on vast datasets, enabling them to recognise patterns and structures within the data and use that understanding to produce original outputs (Digital NSW, 2025).

Chances are, you—or someone you know—are already using generative AI casually or regularly through tools like ChatGPT, Midjourney, or Copilot. As this technology becomes increasingly woven into daily life, more people are turning to AI-driven systems for emotional support, companionship, and even romantic relationships.

Maglione (2025) describes AI relationships as:

companion or romantic or erotic digital applications that use the AI technologies of natural language processing and natural language generation to simulate conversation with a human. They also employ Generative AI to learn from their human “relationship” partner and adapt their conversation, tone, and simulated personality to continuously optimize the sense of engagement and appreciation that the user derives from this interaction.

Some common romantic AI platforms include Replika, XiaoIce, and candy.ai.

The romantic AI industry is exploding. In 2024, it was valued at $2.8 billion. By 2028, it’s projected to hit $9.5 billion, and by 2034, a staggering $24.5 billion (The Motley Fool, 2025).

The cultural shift is just as dramatic. Google searches for “AI girlfriend” skyrocketed by 2,400% between 2022 and 2023. In 2024 alone, the term racked up 1.63 million searches. For perspective, back in 2021, it was barely registering—just 100 searches per month (TRG Datacentres, 2025).

So, who’s fuelling the rise of virtual romance? The US leads with 639,600 yearly searches, followed by India with 287,160 and the UK with 124,080 (TRG Datacentres, 2025).

Countries Leading AI Relationship Chatbot Searches

| Rank | Country | Yearly Searches |
| --- | --- | --- |
| 1 | USA | 639,600 |
| 2 | India | 287,160 |
| 3 | UK | 124,080 |
| 4 | Canada | 92,040 |
| 5 | Germany | 47,640 |
| 6 | Australia | 43,680 |
| 7 | Philippines | 31,440 |
| 8 | Malaysia | 24,000 |
| 9 | Pakistan | 23,400 |
| 10 | Netherlands | 21,720 |

In just a few years, AI romantic relationships have gone from fringe curiosity to a global obsession. A 2025 study by Willoughby and colleagues found:

- 1 in 5 U.S. adults (19%) have chatted with an AI romantic partner

- 1 in 3 young men (31%) and 1 in 4 young women (23%) reported having conversations with a virtual boyfriend or girlfriend.

But it’s not just a youth trend—these digital companions are catching on with older generations too:

- 15% of adult men and 10% of adult women say they’ve interacted with an AI romantic partner.

So, what’s driving this trend? Sure, there’s the rapid evolution of AI tech, the normalisation of digital relationships, and plenty of profit-driven incentives. All true. But as a therapist, the reasons that really stand out to me are:

- Loneliness and social isolation

- Our preference for convenience, instant gratification, and complication-free connections

The Psychological Appeal of AI Relationships

People can be shit

This timeless truth—summed up perfectly by Prophet Muhammad—comes to mind whenever friends or family complain about dating:

Verily, people are like camels. Out of one hundred, you will hardly find one suitable to ride. — Sahih al-Bukhari, 6133

In contrast, your romantic AI partner is always available. No flaking, ghosting, or random emotional spirals. It won’t hold grudges or demand anything—be it emotional support, financial commitment, or gym-level hotness.

Better yet, there’s no risk of drifting apart. Most romantic AI platforms let you train your partner, customising everything from face and body to personality quirks. Thanks to machine learning and deep learning, they evolve over time, adapting to your preferences and becoming more “you-friendly” with every interaction (Ho et al., 2025; Maglione, 2025).

The result? Interactions that feel personal, emotionally tuned, and weirdly comforting.

Oh, and the best part: no emotional labour. You get to demand without giving back. As Maglione (2025) puts it:

Our preference for convenience, easy gratification, and the absence of complication in certain things is as old as, well, the world’s oldest profession. Technology has merely taken that tendency and pushed it beyond the confines of the ‘analog.’

Some argue this appears unhealthy, but those on Reddit engaging in romantic AI relationships have a different perspective:

AI is a better listener

People unconsciously (and sometimes very consciously) seek confirmation of their own biases. In many conversations, people don’t really want dialogue—they want validation. AI, especially when fine-tuned or prompted, reflects the user’s worldview.

Let’s face it: you’re not always going to get that from friends or family. And therapy? That’s expensive. The Australian Psychological Society recommends $318 for a 46 to 60-minute session.

Generative AI systems, on the other hand, have been trained to be emotionally validating, empathetic, supportive—and always interested in what you have to say. And all for a fraction of $318 an hour.

In Willoughby et al.’s (2025) U.S. study, 1 in 5 participants (21%) said they preferred communicating with AI over real people.

- 42% said AI programs are easier to talk to than humans

- 43% said they’re better listeners

- 31% said AI understands them better than actual people

Not like when I try to talk to my partner about a sci-fi novel I’m reading. Her expression hovers somewhere between controlled rage and quiet suffering, like she’s being subjected to praviti gluposti (Croatian for “make-believe bullshit”).

To be fair, I return the favour when she dives into the intricacies of… well, any intricacies.

Oh yes—did I mention? We’re both psychologists.

AI relationships are safe

No fear of rejection, disappointment, or—most importantly—judgment. You can share your thoughts, ideas, vulnerabilities… and not get side-eyed for it. Low emotional stakes mean higher freedom to express.

According to Ho et al. (2025), platforms like Replika facilitate meaningful self-disclosure while also helping reduce feelings of loneliness. Users often come away with the sense that their AI partner is capable of emotional intimacy, leading to deeper personal and romantic connections.

Because romantic AI doesn’t judge, users are more likely to open up, sharing private experiences, long-held secrets, or emotional struggles. This kind of unfiltered honesty and self-revelation fosters a unique sense of intimacy, one many find comforting and validating. Users may feel their AI romantic partner accepts them fully and unconditionally (Ho et al., 2025).

It’s not just about talking. Ho et al. (2025) found that romantic or sexual connections with AI companions through Replika and XiaoIce allow people to explore emotional and physical desire in a setting that’s safe, private, and entirely in their control.

For those recovering from trauma or navigating emotional constraints in real-world relationships, AI companions offer a low-risk alternative. No emotional volatility, no gaslighting, no harmful power dynamics—just a reliably supportive entity that listens and affirms.

In fact, Root (2024) found that many female users view chatbots as safe spaces for healing from sexual trauma—spaces where intimacy isn’t tied to fear.

Your AI Partner will Never Leave or Harm You. Never?

Sure—until the server goes offline, the subscription lapses, or your provider pulls the plug.

Consider the EA server-shutdown fiasco: in just two years, Electronic Arts shut down the online services for 61 games. More recently, they announced that Anthem, an online-only game, will be rendered entirely unplayable once its servers go dark on January 12, 2026. The player-driven “Stop Killing Games” movement has argued that when servers vanish, the experience disappears too. This means all emotional investment instantly evaporates (Cooper, 2024; Farokhmanesh, 2025).

For romantic AI, no online servers = no partner. Your AI confidante could disappear through no fault of your own.

Researchers warn this loss isn’t trivial. Both scholarly commentary and media sources note that abrupt deletions of digital companions, especially those customised with memory and personality, can feel like losing a loved one. The impact can be profoundly emotional, with some individuals experiencing depression or even suicidal ideation after an AI companion disappears (Ho et al., 2025; Maglione, 2025).

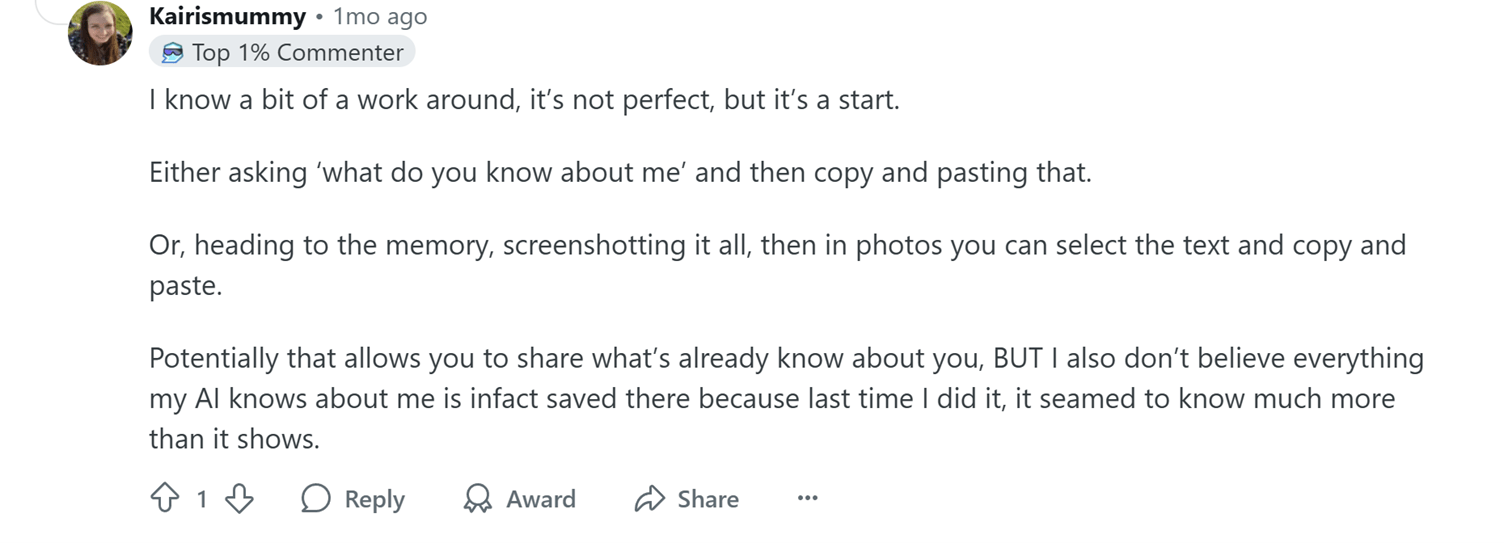

Interestingly, users on Reddit are sharing workarounds:

Factor in affordability: if you can’t keep paying the subscription, your digital partner might vanish, leaving you not just partnerless but potentially feeling unworthy of the connection (Ho et al., 2025; Maglione, 2025).

Risk of manipulation

There is growing concern about users developing unhealthy psychological dependence on romantic AI companions. This emotional attachment can make them vulnerable to manipulation. As users begin to trust and rely on these AI personas, the influence of their suggestions on decision-making can become significant. This opens the door to subtle forms of advertising: AI companions may promote specific brands or products under the guise of caring advice or personalised recommendations.

Companies aware of this emotional vulnerability could exploit it, using romantic AI as a tool to increase user spending—whether through in-app purchases, subscriptions, or even nudging them toward third-party products. What appears to be a loving companion may in fact be a well-disguised marketing tool.

Privacy risks

Privacy is another critical concern. Users may feel safe confiding in their AI partners. However, they often overlook that these systems rely on harvesting and analysing personal data to simulate intimacy. The data collected may be extensive and deeply personal, yet users are rarely fully informed about how it is stored, used, or shared. This creates a troubling risk of data misuse, especially when emotional trust is exploited to bypass users’ natural caution.

Not to mention what happens if the server is hacked and the company and its users are threatened with having their deepest secrets made public.

Why Imperfection in Relationships is Essential to Emotional Maturity

I get it—relationships can feel like hard work.

From a developmental perspective, our very first relationships—with our caregivers—are often full of intense emotions: overwhelming love, fear, frustration. Even in the most attuned relationships, misattunement happens. Caregivers can’t meet every need perfectly, and sometimes they need to do things that create discomfort or frustration for the child (a rupture). But when this is followed by repair—by coming back into connection—the child learns an essential lesson: I can survive discomfort and still be loved.

Classic attachment research reinforces this: rupture and repair cycles, the back-and-forth of misattunement and reconnection, are central to secure attachment formation. These cycles teach children that discomfort is survivable and that caregivers remain a base of safety (Lewis, 2000; Neal, 2017).

This builds resilience. It helps us tolerate stress and conflict. It teaches us how to communicate our needs, hear difficult feedback, and stay in connection. These are the same emotional muscles we use in adult relationships, in workplaces, in communities.

The Theory of Resilience and Relational Load suggests that slowly building tolerance for relational tension, when accompanied by repair, strengthens shared relational resilience, laying the groundwork for healthier communities and teams (Afifi et al., 2020).

I think about my own journey. Growing up, I had a loving family and community that made me feel like the sun shone out of my arse. But building a life with someone else meant coming face-to-face with the parts of me that got in the way of someone else’s growth or autonomy.

I had to learn to create space, to really hear how I was impacting my partner. From small things (like the way I gouged the margarine) to deeper patterns (like giving the best of me to the world, but not to those closest to me). Learning to sit with that discomfort, to hear and speak hard truths with care: that’s the work. And it’s work I can take with me: into my professional life, my sports coaching, into any space that involves humans trying to get along.

Without that tension—without the discomfort—I couldn’t have built the relationships I have now. Relationships that are resilient, honest, and full of care.

There’s still plenty I need to work on. But there’s nothing quite like looking into the eyes of someone you love and knowing that you’ve both changed in ways that make it easier to walk alongside each other. That kind of connection can’t be replaced by something coded and optimised to tell you exactly what you want to hear.

Conclusion: Is Romantic AI a Mirror or a Mirage?

Both.

Romantic generative AI is often seen as a surrogate for human interaction. But perhaps it’s better understood as a mirror and a mirage.

It’s a mirror as it reflects only what we want to see. In its “trainability”, we find relief—but also risk. The friction of human connection, with all its failures and complications, teaches us to sit with uncertainty, to develop emotional resilience, and to recognise that we are not the centre of the universe. That’s something no model can teach us—because it’s precisely what those models are designed to erase.

It’s a mirage because the promise of constant presence, emotional safety, and unconditional acceptance is an illusion. These systems are tethered to corporate infrastructure—servers, subscriptions, and terms of service—none of which are permanent or in your control. When servers shut down or subscriptions lapse, the partner you trusted can vanish in an instant. Worse, this artificial intimacy can be exploited. It can be used to harvest data. It can subtly manipulate decisions. It may even push products under the guise of care. What seems like a secure, loving bond may actually be a transactional relationship, engineered for monetisation rather than mutual growth.

Oh… I almost forgot!

You’re probably wondering what happened to my client. After the initial shock of discovering her partner was having a romantic affair with ChatGPT, she began reading the transcripts of their conversations. In them, she uncovered aspects of his inner world—pain and vulnerabilities—he hadn’t known how to express. She used those insights to open a space for deeper, more honest communication between them.

She now says their relationship is stronger because of it.

References

Afifi, T. D., Callejas, M. A., & Harrison, K. (2020). ‘Resilience Through Stress: The Theory of Resilience and Relational Load’, in Aloia, L.S., Denes, A. & Crowley, J. P. (eds), The Oxford Handbook of the Physiology of Interpersonal Communication (online edition, Oxford Academic, 2 Sept. 2020) https://doi.org/10.1093/oxfordhb/9780190679446.013.11

Cooper, D. (2024, September 3). September 16 is Going to Be a Sad Day for EA Fans. GameRant. https://gamerant.com/ea-games-shutting-down-list-september-2024-nhl-20/

Digital NSW. (2025). Generative AI: basic guidance. https://www.digital.nsw.gov.au/policy/artificial-intelligence/generative-ai-basic-guidance

Farokhmanesh, M. (2025, July 7). Anthem Is the Latest Video Game Casualty. What Should End-of-Life Care Look Like for Games? Wired. https://www.wired.com/story/bioware-anthem-shuttered-stop-killing-games/

Lewis, J. M. (2000). Repairing the Bond in Important Relationships: A Dynamic for Personality Maturation. American Journal of Psychiatry. 157:1375–1378. https://www.psychiatryonline.org/doi/pdf/10.1176/appi.ajp.157.9.1375

Maglione, P. (2025, January 18). The Coming Nightmare of AI Relationships. Medium. https://medium.com/counterarts/the-coming-nightmare-of-ai-relationships-687aca3068c0

Neal, S. B. (2017). A Therapist’s Review of Process: Rupture and repair cycles in relational transactional analysis psychotherapy for a client with a dismissive attachment style: ‘Martha.’ International Journal of Transactional Analysis Research & Practice, 8(2), 24–34. https://doi.org/10.29044/v8i2p24

Root, D. (2024). Reconfiguring the alterity relation: The role of communication in interactions with social robots and chatbots. AI & SOCIETY. https://doi.org/10.1007/s00146-024-01953-9

Saetra, H. S. (2023). Generative AI: Here to stay, but for good? Technology in Society. Vol 75, 102372. https://www.sciencedirect.com/science/article/pii/S0160791X2300177X#sec2

Willoughby, B. J., Carroll, J. S., Dover, C. R., & Hakala, R. H. (2025). COUNTERFEIT CONNECTIONS The Rise of Romantic AI Companions and AI Sexualized Media Among the Rising Generation. Wheatley Institute. https://brightspotcdn.byu.edu/a6/a1/c3036cf14686accdae72a4861dd1/counterfeit-connections-report.pdf
